This will walk you through installing the Nvidia GPU kernel module and CUDA drivers in a docker container running on CoreOS.

Launch CoreOS on an AWS GPU instance

Launch a new EC2 instance

Under “Community AMIs”, search for ami-f669f29e (CoreOS stable 494.4.0 (HVM))

Select the GPU instance type g2.2xlarge.

Increase the root EBS volume from 8 GB to 20 GB to give yourself some breathing room.

ssh into the CoreOS instance

Find the public IP address of the EC2 instance launched above, and ssh into it.
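
A minimal sketch of this step, assuming the default CoreOS login user `core`. `PUBLIC_IP`, `coreos_ssh`, and the `run` wrapper are hypothetical names used for illustration. The `run` wrapper only prints commands when `DRY_RUN` is set.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# ssh into the CoreOS instance; CoreOS logs you in as the "core" user.
coreos_ssh() {
  run ssh "core@$1"
}

# Usage (PUBLIC_IP is a placeholder for the instance's public IP):
#   coreos_ssh "$PUBLIC_IP"
```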

Run an Ubuntu 14 docker container in privileged mode
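
A sketch of the container launch, assuming the `ubuntu:14.04` image tag for "Ubuntu 14". `start_build_container` and the `run`/`DRY_RUN` dry-run wrapper are hypothetical helpers. The `--privileged` flag is what allows the container to load kernel modules into the CoreOS host kernel.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Start an interactive, privileged Ubuntu container with a root shell.
# The image tag defaults to ubuntu:14.04 (an assumption) and can be overridden.
start_build_container() {
  run docker run --privileged -i -t "${1:-ubuntu:14.04}" /bin/bash
}
```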

Once the container starts, you should be in a root shell inside it. The rest of the steps assume this.

Install build tools and other required packages

Install gcc 4.7 to match the version of gcc that was used to build the CoreOS kernel.
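
A sketch of the package installation inside the container. The `gcc-4.7` and `g++-4.7` package names follow Ubuntu's naming. The extra tools (git, make, wget) are an assumption based on the later steps. `install_build_tools` and the `run`/`DRY_RUN` wrapper are hypothetical.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Refresh the package index, then install gcc/g++ 4.7 and the tools used
# later for cloning, downloading, and building (package list is assumed).
install_build_tools() {
  run apt-get update &&
    run apt-get install -y gcc-4.7 g++-4.7 git make wget
}
```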

Set gcc 4.7 as the default
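
One way to make gcc 4.7 the default is `update-alternatives`. This sketch assumes the image's stock compiler is gcc 4.8 at `/usr/bin/gcc-4.8`. In auto mode, the alternative with the higher priority wins, so 4.7 gets 20 and 4.8 gets 10. `prefer_gcc47` and the `run`/`DRY_RUN` wrapper are hypothetical.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Register gcc 4.8 and gcc 4.7 as alternatives for /usr/bin/gcc.
# In auto mode the highest priority wins, so gcc 4.7 (20) beats 4.8 (10).
prefer_gcc47() {
  run update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-4.8 10 &&
    run update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-4.7 20
}
```

Afterwards, `update-alternatives --display gcc` shows which entry is current.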

Verify

The output should list gcc 4.7 with an asterisk next to it.
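
The helper below lets a script check the compiler instead of eyeballing it. It reads `gcc --version` output and keeps only the MAJOR.MINOR part. It assumes the version number is the last field of the first output line, which is how gcc usually formats it. `gcc_major_minor` and `require_gcc` are hypothetical names.

```shell
# Print MAJOR.MINOR from `gcc --version` output read on stdin; the version
# number is taken from the last field of the first line.
gcc_major_minor() {
  awk 'NR == 1 { print $NF }' | cut -d. -f1,2
}

# Fail with a message unless the gcc found on PATH is the wanted MAJOR.MINOR.
require_gcc() {
  have=$(gcc --version | gcc_major_minor)
  if [ "$have" != "$1" ]; then
    echo "gcc is $have, expected $1" >&2
    return 1
  fi
}
```

For example, `require_gcc 4.7` returns non-zero if a different gcc is still the default.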

Prepare CoreOS kernel source

Clone the CoreOS kernel repository
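
A sketch of the clone step. The repository URL is a parameter because the exact URL is not preserved here. The `/usr/src/kernels/linux` destination, `clone_kernel`, and the `run`/`DRY_RUN` wrapper are assumptions.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Clone the kernel repository (URL supplied by the caller) into DEST,
# defaulting to a hypothetical /usr/src/kernels/linux.
clone_kernel() {
  run git clone "$1" "${2:-/usr/src/kernels/linux}"
}
```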

Find the CoreOS kernel version

The CoreOS kernel version is 3.17.2.
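
The running kernel's release can be read with `uname -r`, either on the CoreOS host or inside the container, since containers share the host kernel. That string may carry a suffix after the numeric version. The hypothetical `kernel_base_version` helper keeps only the numeric part. The example suffixes in the comments are illustrative.

```shell
# Reduce a kernel release string such as "3.17.2+" or "3.17.2-coreos"
# to its leading MAJOR.MINOR[.PATCH] part.
kernel_base_version() {
  printf '%s\n' "$1" | sed -E 's/^([0-9]+\.[0-9]+(\.[0-9]+)?).*/\1/'
}

# Usage:
#   kernel_base_version "$(uname -r)"
```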

Switch to the correct branch for this kernel version
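
This sketch assumes the CoreOS kernel repository keeps one branch per release, named with a `v<version>` suffix (for example `coreos/v3.17.2`). That naming scheme is a guess, not a confirmed convention. Under that assumption, the hypothetical `find_kernel_branch` helper picks the matching remote branch from `git branch -r` output.

```shell
# From `git branch -r` output on stdin, print the first remote branch whose
# name ends in "/v<VERSION>" (e.g. origin/coreos/v3.17.2 for 3.17.2).
# Prints nothing if no branch matches.
find_kernel_branch() {
  awk -v want="/v$1" '
    { b = $1; n = length(b); m = length(want) }
    n >= m && substr(b, n - m + 1) == want { print b; exit }
  '
}

# Usage, inside the cloned repository:
#   branch=$(git branch -r | find_kernel_branch 3.17.2) &&
#     [ -n "$branch" ] && git checkout "$branch"
```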

Create the kernel configuration file
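
A common way to reproduce the running kernel's configuration is to decompress `/proc/config.gz`. That file exists only when the kernel was built with `CONFIG_IKCONFIG_PROC`. This sketch assumes the file is available on CoreOS. `write_kernel_config` and its default paths are hypothetical.

```shell
# Decompress the running kernel's config into the source tree as .config.
# /proc/config.gz only exists if the kernel has CONFIG_IKCONFIG_PROC enabled.
write_kernel_config() {
  src=${1:-/proc/config.gz}
  dest=${2:-/usr/src/kernels/linux/.config}
  if [ ! -r "$src" ]; then
    echo "cannot read $src" >&2
    return 1
  fi
  gzip -dc "$src" > "$dest"
}
```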

Prepare the kernel source for building modules
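
The kernel build system provides a `modules_prepare` target for this purpose. It generates the headers and scripts needed to build out-of-tree modules without building the whole kernel. In this sketch, `prepare_modules`, its default source path, and the `run`/`DRY_RUN` wrapper are hypothetical names.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Run the kernel's modules_prepare target in the given source tree.
prepare_modules() {
  run make -C "${1:-/usr/src/kernels/linux}" modules_prepare
}
```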

Now you should be ready to install the nvidia driver.

Hack the kernel version

To avoid "nvidia: version magic" errors when loading the module, you need to hack the kernel version in the prepared source tree.

I’ve posted to the CoreOS Group to ask why this hack is needed.
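
One plausible explanation, labeled here as an assumption, is a release-string mismatch. The string generated in the prepared tree (3.17.2) may differ from what the running kernel reports (for example 3.17.2+). Modules built against the tree would then carry a mismatched vermagic. The hypothetical `set_uts_release` helper rewrites `UTS_RELEASE` in `include/generated/utsrelease.h` to a given string. It is meant to run after the prepare step.

```shell
# Rewrite the UTS_RELEASE define in a utsrelease.h file to RELEASE.
# Assumes RELEASE contains no "/", "&" or "\" (true for kernel release strings).
set_uts_release() {
  file=$1
  release=$2
  sed -e "s/^#define UTS_RELEASE \".*\"/#define UTS_RELEASE \"$release\"/" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Usage, from the kernel source directory:
#   set_uts_release include/generated/utsrelease.h "$(uname -r)"
```

After building, `modinfo -F vermagic` on the resulting module should begin with the same string that `uname -r` prints.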

Install nvidia driver

Download
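
A sketch of the download step. The installer URL is not preserved here, so it is a parameter; take it from NVIDIA's CUDA download page. The `/opt/nvidia` directory, `download_cuda`, and the `run`/`DRY_RUN` wrapper are assumptions.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Download the CUDA .run installer from URL into DIR (default /opt/nvidia).
download_cuda() {
  url=$1
  dir=${2:-/opt/nvidia}
  run mkdir -p "$dir" &&
    run wget -P "$dir" "$url"
}
```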

Unpack
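
CUDA `.run` installers of this period can unpack their bundled installers (driver, toolkit, and samples) without installing anything. They do this through an `-extract=<absolute path>` option. This sketch assumes that option behaves as described. `unpack_cuda`, its default destination, and the `run`/`DRY_RUN` wrapper are hypothetical.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Make the CUDA runfile executable and extract its bundled installers into
# DEST. Pass an absolute path for DEST.
unpack_cuda() {
  runfile=$1
  dest=${2:-/opt/nvidia/installers}
  run chmod +x "$runfile" &&
    run mkdir -p "$dest" &&
    run "$runfile" -extract="$dest"
}
```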

Install
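
A sketch of running the driver installer against the prepared kernel tree. nvidia-installer's `--kernel-source-path` option points it at the CoreOS sources, instead of letting it search for kernel headers itself. The `NVIDIA-Linux-x86_64-*.run` file name pattern, the default paths, `install_nvidia_driver`, and the `run`/`DRY_RUN` wrapper are assumptions.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Locate the unpacked NVIDIA driver installer in DIR and run it against the
# kernel source tree KSRC.
install_nvidia_driver() {
  dir=${1:-/opt/nvidia/installers}
  ksrc=${2:-/usr/src/kernels/linux}
  set -- "$dir"/NVIDIA-Linux-x86_64-*.run
  if [ ! -f "$1" ]; then
    echo "no NVIDIA-Linux-x86_64-*.run in $dir" >&2
    return 1
  fi
  run sh "$1" --kernel-source-path="$ksrc"
}
```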

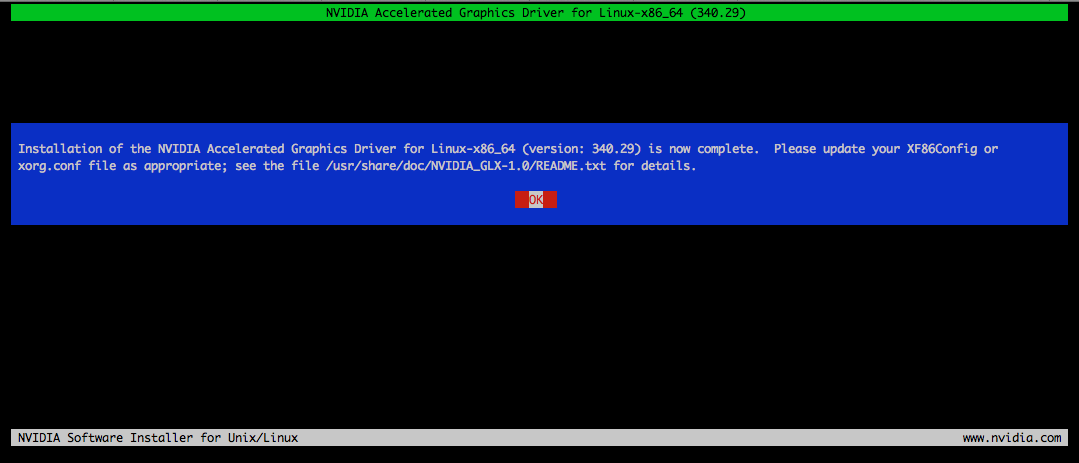

Installer Questions

- Install NVIDIA’s 32-bit compatibility libraries? YES

- Would you like to run nvidia-xconfig? NO

If everything worked, the installer should complete without errors. You can confirm this in /var/log/nvidia-installer.log.
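
The hypothetical `installer_log_ok` helper checks the log from a script. It fails, printing the offending lines, if any line contains `ERROR`. This assumes nvidia-installer marks failures that way. That matches its usual log format but is not guaranteed.

```shell
# Succeed only if the installer log is readable and has no ERROR lines;
# matching lines are printed with their line numbers.
installer_log_ok() {
  log=${1:-/var/log/nvidia-installer.log}
  if [ ! -r "$log" ]; then
    echo "cannot read $log" >&2
    return 1
  fi
  if grep -n 'ERROR' "$log"; then
    return 1
  fi
  return 0
}
```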

Load the nvidia kernel module
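
A sketch of loading the module. It assumes the installer placed `nvidia.ko` under `/lib/modules/$(uname -r)` and refreshed the module index, so `modprobe` can find it. It also relies on the container being privileged. `load_nvidia` and the `run`/`DRY_RUN` wrapper are hypothetical.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Load the nvidia module into the (shared) host kernel.
load_nvidia() {
  run modprobe nvidia
}
```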

No errors should be returned. Next, verify that the module is loaded.

The list of loaded modules should include an entry for nvidia.
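
This check can be scripted. `lsmod` prints one module per line with the module name in the first column. The hypothetical `module_loaded` helper looks for an exact match in that column.

```shell
# Succeed if module NAME appears in the first column of lsmod output on stdin.
module_loaded() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# Usage:
#   lsmod | module_loaded nvidia && echo "nvidia is loaded"
```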

Install CUDA

To fully verify that the kernel module is working correctly, install the CUDA drivers and library, then run a device query.

First, install CUDA.
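
A sketch of running the CUDA toolkit installer from the unpacked files. The `cuda-linux64-rel-*.run` name pattern is an assumption about what the extraction step produces. `install_cuda_toolkit`, its default directory, and the `run`/`DRY_RUN` wrapper are hypothetical.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Locate the unpacked CUDA toolkit installer in DIR and run it.
install_cuda_toolkit() {
  dir=${1:-/opt/nvidia/installers}
  set -- "$dir"/cuda-linux64-rel-*.run
  if [ ! -f "$1" ]; then
    echo "no CUDA toolkit installer in $dir" >&2
    return 1
  fi
  run sh "$1"
}
```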

Verify CUDA

The device query should detect the GPU and finish with Result = PASS.
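
A sketch of that check. `SAMPLE_DIR` stands for wherever the deviceQuery sample ended up; this is an assumption, though in the CUDA samples tree it lives under `1_Utilities/deviceQuery`. `run_device_query`, `device_query_passed`, and the `run`/`DRY_RUN` wrapper are hypothetical.

```shell
# Hypothetical dry-run wrapper: print the command when DRY_RUN is set,
# otherwise run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Build the deviceQuery sample in SAMPLE_DIR, then run it.
run_device_query() {
  run make -C "$1" && run "$1/deviceQuery"
}

# Succeed if deviceQuery output on stdin contains "Result = PASS".
device_query_passed() {
  grep -q 'Result = PASS'
}

# Usage:
#   run_device_query "$SAMPLE_DIR" | device_query_passed && echo "GPU OK"
```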

Congratulations! You now have a docker container running under CoreOS that can access the GPU.

Appendix: Expose GPU to other docker containers

If other docker containers on this CoreOS instance need to access the GPU, follow these steps.

Exit the docker container

You should be back to your CoreOS shell.

Add nvidia device nodes
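
This sketch uses the fixed device numbers of NVIDIA's driver. All nodes use character major 195: `/dev/nvidiaN` gets minor N, and `/dev/nvidiactl` gets minor 255. `/dev/nvidia-uvm` is different: if your setup uses the nvidia-uvm module, its major is assigned dynamically and must be read from `/proc/devices`. `nvidia_mknod_cmds` and `uvm_major` are hypothetical helpers. They print commands rather than run them, so you can review them first.

```shell
# Print mknod commands for COUNT GPUs plus the control device:
# /dev/nvidiaN is char 195:N and /dev/nvidiactl is char 195:255.
nvidia_mknod_cmds() {
  i=0
  while [ "$i" -lt "$1" ]; do
    echo "mknod -m 666 /dev/nvidia$i c 195 $i"
    i=$((i + 1))
  done
  echo "mknod -m 666 /dev/nvidiactl c 195 255"
}

# Print nvidia-uvm's dynamic major number from /proc/devices-style text
# on stdin (prints nothing if nvidia-uvm is not registered).
uvm_major() {
  awk '$2 == "nvidia-uvm" { print $1; exit }'
}
```

On the CoreOS host, `nvidia_mknod_cmds 1 | sudo sh` covers a g2.2xlarge, which has one GPU. For UVM, use `sudo mknod -m 666 /dev/nvidia-uvm c "$(uvm_major < /proc/devices)" 0` once that module is loaded.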

Verify device nodes
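
In a listing of `/dev/nvidia*`, each node should show a leading `c` (character device) in its mode. The hypothetical `check_char_devices` helper checks the same thing with `test -c` and reports anything missing.

```shell
# Succeed only if every given path exists and is a character device;
# report each path that is not.
check_char_devices() {
  status=0
  for dev in "$@"; do
    if [ ! -c "$dev" ]; then
      echo "not a character device: $dev" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage:
#   check_char_devices /dev/nvidia0 /dev/nvidiactl
```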

Launch docker containers

When you launch other docker containers on the same CoreOS instance, you will need to map the nvidia device nodes into each container so that it can access the GPU.
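
`docker run` has a `--device` option that maps a host device node into a container. This sketch uses a hypothetical `gpu_device_flags` helper to build one `--device` flag per node, so each node appears at the same path inside the container. The image also needs NVIDIA user-space libraries that match the loaded kernel module. This sketch does not cover that.

```shell
# Turn device node paths into docker run --device flags that expose each
# node at the same path inside the container.
gpu_device_flags() {
  flags=""
  for dev in "$@"; do
    flags="$flags --device=$dev:$dev"
  done
  printf '%s\n' "${flags# }"
}

# Usage (IMAGE is a placeholder; the substitution is left unquoted on purpose
# so it splits into separate flags, which is safe for these space-free paths):
#   docker run $(gpu_device_flags /dev/nvidia0 /dev/nvidiactl) -i -t "$IMAGE" /bin/bash
```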
